Powerful hardware and software have made it easier than ever to try new Linux operating systems. Trying Linux on your desktop used to be difficult and usually required a separate machine. Partitioning disks and installing boot loaders to try a new OS put existing OS installations (and your data) at risk. While running a single OS on a desktop, laptop or mobile device is still easier and often preferred, it is often no longer a requirement.

The default boot loader of many Linux distributions, GRUB 2, can now mount CD or DVD image (“ISO”) files as loopback devices. If the Linux system inside the ISO file supports this type of booting, GRUB 2 can boot a machine from a CD or DVD image without the physical disc. This is great for trying new systems.
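As a sketch of what loopback booting looks like in practice, the Python helper below generates a GRUB 2 `menuentry` that loop-mounts an ISO file and boots the kernel inside it. It assumes an Ubuntu-style live image (kernel and initrd under `/casper/`, booted with the `boot=casper iso-scan/filename=...` parameters) stored on the partition GRUB uses as its root, with `/boot` not on a separate partition. Other distributions use different paths and kernel parameters, and the function name `grub_iso_entry` is hypothetical.

```python
def grub_iso_entry(title: str, iso_path: str,
                   kernel: str = "/casper/vmlinuz",
                   initrd: str = "/casper/initrd",
                   kernel_args: str = "boot=casper iso-scan/filename=${isofile} noprompt noeject") -> str:
    """Return a GRUB 2 menuentry that loop-mounts an ISO file and boots from it.

    The default kernel/initrd paths and kernel_args assume an Ubuntu-style
    live ISO; adjust them for other distributions.
    """
    return "\n".join([
        f'menuentry "{title}" {{',
        f'    set isofile="{iso_path}"',
        # GRUB's loopback command exposes the ISO file as the device (loop).
        "    loopback loop ${isofile}",
        # Load the kernel and initial RAM disk from inside the mounted ISO.
        f"    linux (loop){kernel} {kernel_args}",
        f"    initrd (loop){initrd}",
        "}",
    ])


if __name__ == "__main__":
    print(grub_iso_entry("Try Ubuntu from ISO", "/boot/iso/ubuntu.iso"))
```

The printed entry could be appended to `/etc/grub.d/40_custom`, after which the GRUB configuration is regenerated (for example with `update-grub` on Debian and Ubuntu). The ISO's own `boot/grub` directory is the place to check for the exact kernel and initrd paths it expects.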

To try almost any Linux or Windows operating system, VirtualBox can run a whole virtual hardware system inside your existing desktop or laptop operating system (usually Mac or Windows, sometimes Linux).
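VirtualBox can also be driven from the command line with `VBoxManage`. The sketch below builds the commands to create a virtual machine and boot it from an ISO file. The VM name, the OS type `Ubuntu_64`, the memory size and the helper names are illustrative assumptions, and the commands are only printed unless `run_all` is called on a machine with VirtualBox installed.

```python
import shlex
import shutil
import subprocess
from typing import List


def vbox_try_iso_commands(vm_name: str, iso_path: str,
                          ostype: str = "Ubuntu_64",
                          memory_mb: int = 2048) -> List[List[str]]:
    """Build VBoxManage commands that create a VM and boot it from an ISO image."""
    return [
        # Create and register a new, empty VM definition.
        ["VBoxManage", "createvm", "--name", vm_name, "--ostype", ostype, "--register"],
        # Give the VM some RAM (in MB).
        ["VBoxManage", "modifyvm", vm_name, "--memory", str(memory_mb)],
        # Add an IDE controller to hold a virtual DVD drive.
        ["VBoxManage", "storagectl", vm_name, "--name", "IDE", "--add", "ide"],
        # "Insert" the ISO file into the virtual DVD drive.
        ["VBoxManage", "storageattach", vm_name, "--storagectl", "IDE",
         "--port", "0", "--device", "0", "--type", "dvddrive", "--medium", iso_path],
        # Power on the VM; with no hard disk attached it boots from the DVD.
        ["VBoxManage", "startvm", vm_name],
    ]


def run_all(commands: List[List[str]]) -> None:
    """Run the commands in order, stopping at the first failure."""
    if shutil.which("VBoxManage") is None:
        raise RuntimeError("VBoxManage was not found on PATH")
    for cmd in commands:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them; call run_all() to execute.
    for cmd in vbox_try_iso_commands("TryLinux", "/path/to/linux.iso"):
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Building the commands as argument lists, rather than shell strings, avoids quoting problems with ISO paths that contain spaces.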

Server hardware provisioning has also become heavily virtualized through Infrastructure as a Service (IaaS, also known as cloud) computing systems such as OpenStack, which you can try on TryStack, and providers such as Amazon. On top of these infrastructures, Platform as a Service (PaaS) and Software as a Service (SaaS) offerings are now common.

In recent years, very large websites have increasingly used containers and related software technologies to bundle complicated software dependencies together, so that the same software can be developed on laptop hardware and then moved to and deployed on server hardware. Containers are portable and leaner than the whole virtual machines that IaaS has focused on. Desktop computers and desktop operating systems exist to run software applications. Server computers and server operating systems exist to run increasingly complicated applications built from other software that is itself changing quickly.

Docker is a great implementation of containers. It was developed to gain benefits analogous to those of physical steel shipping containers, which carry goods on container ships, trucks and trains; in the same way, Docker transports bundles of quickly changing server software that work together. Separating development from operations while both groups use an identical container is a significant step forward for both kinds of computer professionals.

Several new tools such as Vagrant and boot2docker provide wrappers around VirtualBox that make virtualization in general, and VirtualBox in particular, even easier to use when developing these quickly changing server software bundles. Containers have been around for a long time, but in 2014 both LXC (the userspace tools for the Linux kernel's container features) and Docker were declared ready for production by the people developing them.

Software runs on hardware, and hardware is constantly changing and getting faster. Gordon Moore, a co-founder of Intel Corporation, predicted exponential growth in the number of transistors on a chip: a doubling roughly every two years (often quoted as every 18 months), a pace the industry has followed fairly consistently. His prediction was about transistor counts, but many other factors have improved at approximately the same rate. Computers use their CPU, random access memory (RAM, short-term memory), disk storage (hard disks or, increasingly, solid-state drives; long-term memory) and network to do interesting things. We interact with computers through their screens (sometimes touch-enabled), mice, keyboards, printers and other devices (often USB).
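A constant doubling period makes this kind of growth easy to compute: after `t` months, a quantity that doubles every `T` months has grown by a factor of `2 ** (t / T)`. The small Python function below (its name is hypothetical) shows how much the choice between two years and the often-quoted 18 months matters over a decade.

```python
def growth_factor(months: float, doubling_months: float = 24.0) -> float:
    """Factor by which a quantity grows over `months` if it doubles every `doubling_months`."""
    return 2.0 ** (months / doubling_months)


if __name__ == "__main__":
    # Ten years of growth under two common readings of Moore's law.
    print("doubling every 24 months:", growth_factor(120))
    print("doubling every 18 months:", round(growth_factor(120, 18), 1))
```

For example, `growth_factor(120)` gives 32.0 (five doublings in ten years), while `growth_factor(120, 18)` gives roughly 101.6, more than three times as much.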

Networks are part of the hardware we use. The Internet is the largest, most amazing collection of networks ever. Its adoption by the public, beginning in the late 1990s, has directly and indirectly changed how we all work and live together. Network traffic can travel over fiber optic cables, copper wires or wireless Wi-Fi radio links. Wired Ethernet is specified by the IEEE 802.3 standards, and Wi-Fi by the IEEE 802.11 standards.

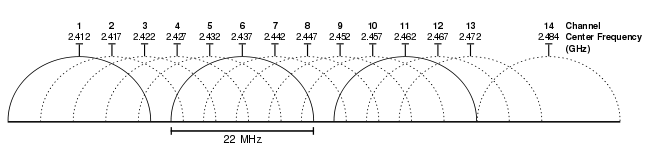

Wi-Fi standards have evolved quite a bit, and the speeds keep getting faster. The frequencies specified by the 802.11 standards allow all these little radio transceivers to interoperate fairly well. The dynamic nature of Wi-Fi and mobile devices has radically altered the assumptions behind network software, which previously assumed very few changes. Default access point settings are often not enough to get the best performance from a wireless network, because they cannot predict the environmental factors and interference present where the access point is deployed. There are also now two frequency bands (2.4 GHz and 5 GHz), each divided into channels. Newer devices built to newer standards are designed to be backward compatible with older ones. The most commonly seen Wi-Fi standards are 802.11b/g/n, with 802.11ac devices now available.
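Wi-Fi channels are numbered slots whose center frequencies follow simple formulas: in the 2.4 GHz band, channels 1 to 13 sit at 2407 + 5 × n MHz (channel 14, allowed only in Japan, is at 2484 MHz), and 5 GHz channels sit at 5000 + 5 × n MHz. Because 2.4 GHz channels are only 5 MHz apart but roughly 20 to 22 MHz wide, neighboring channels overlap, which is one reason default access point settings can perform poorly. The sketch below computes center frequencies and checks overlap; the function names, the accepted 5 GHz channel range and the 22 MHz width (the 802.11b figure) are assumptions made for illustration.

```python
def channel_center_mhz(channel: int, band: str) -> int:
    """Return the center frequency in MHz of a Wi-Fi channel in the "2.4" or "5" GHz band."""
    if band == "2.4":
        if 1 <= channel <= 13:
            return 2407 + 5 * channel
        if channel == 14:  # special case, Japan only
            return 2484
    elif band == "5":
        if 32 <= channel <= 177:  # assumed range covering the common 5 GHz channels
            return 5000 + 5 * channel
    raise ValueError(f"unsupported channel {channel} in the {band} GHz band")


def channels_overlap(a: int, b: int, width_mhz: int = 22) -> bool:
    """True if two 2.4 GHz channels of the given width overlap in frequency."""
    return abs(channel_center_mhz(a, "2.4") - channel_center_mhz(b, "2.4")) < width_mhz


if __name__ == "__main__":
    for ch in (1, 6, 11):
        print(ch, channel_center_mhz(ch, "2.4"), "MHz")
    print("1 and 6 overlap:", channels_overlap(1, 6))  # False
    print("1 and 3 overlap:", channels_overlap(1, 3))  # True
```

Under these assumptions channels 1, 6 and 11 are 25 MHz apart and do not overlap, which is why they are the usual choice for neighboring 2.4 GHz access points.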
